AI ≠ Just LLMs

- Rick Pollick

- Jul 15, 2025

- 4 min read

Updated: Jul 16, 2025

Why AI Doesn’t Always Mean Large Language Models

Artificial Intelligence (AI) is everywhere these days. From chatbots and image generators to recommendation systems and autonomous vehicles, it’s tempting to reduce AI to one flashy idea: large language models (LLMs) like ChatGPT or Claude.

But here’s the truth: AI isn’t just about language models.

In fact, many of the smartest, most useful AI systems today don’t involve neural networks at all. They’re built using logic, decision trees, structured workflows, and deterministic rules. They process input, make decisions, and provide value—without ever generating a single token of “human-like” language.

This isn’t theoretical. It’s how we built the Company Regulatory Compliance Intelligence Platform—a sophisticated application used in the pharmaceutical industry to monitor and interpret regulatory changes.

Let’s explore what AI really means, how logic-based design can drive intelligent apps, and why not using an LLM is often the smartest path forward.

AI ≠ Just LLMs

Artificial Intelligence is broadly defined as the simulation of human intelligence in machines. That simulation can take many forms, including:

Rules-based systems

Search algorithms

Predictive models

Heuristics

Neural networks (like LLMs)

LLMs are just one narrow (though powerful) category. For most enterprise use cases—especially where stability, cost, and explainability matter—logic-driven AI still rules.

“Much of the value in AI today comes not from generative models, but from decision systems that use structured logic to automate well-defined processes.” (O’Reilly Media, Practical AI Architectures, 2022)

Case Study: Building a Smart Regulatory Intelligence App Without an LLM

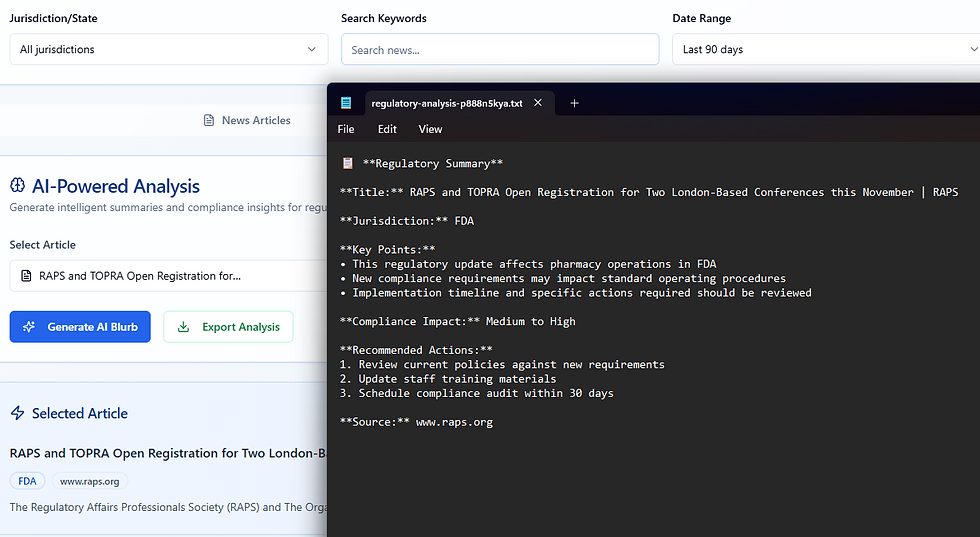

I built a powerful compliance monitoring platform for the pharmaceutical space. It aggregates and analyzes regulatory content from multiple sources and provides intelligent sorting, filtering, and analysis—all without relying on generative AI.

Here’s how it works.

Core Functionality

1. Web Crawling Engine

Using the @mendable/firecrawl-js library, the app collects structured content from regulatory websites. The Firecrawl service extracts titles, bodies, metadata, and publication dates.
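To make the hand-off to the rest of the app concrete, here is a minimal sketch that normalizes one scraped page into an article record. The `RawScrape` shape, the `Article` type, and `toArticle` are hypothetical names for illustration only. They are not the Firecrawl SDK's actual response types, and the real field names may differ.

```typescript
// Hypothetical shape of one scraped page. The actual Firecrawl response
// fields are not shown in this post, so these names are assumptions.
interface RawScrape {
  url: string;
  markdown?: string;
  metadata?: { title?: string; publishedTime?: string };
}

// Hypothetical normalized record consumed by the rest of the app.
interface Article {
  id: string;
  url: string;
  title: string;
  content: string;
  publishedAt: Date | null; // null when the source gives no usable date
}

function toArticle(raw: RawScrape): Article {
  const content = (raw.markdown ?? "").trim();

  // Prefer the metadata title, then the first markdown heading, then the URL.
  const headingMatch = /^#[ \t]+(.+)$/m.exec(content);
  const title = raw.metadata?.title?.trim() || headingMatch?.[1]?.trim() || raw.url;

  // Date.parse returns NaN for missing or unparseable dates.
  const timestamp = raw.metadata?.publishedTime
    ? Date.parse(raw.metadata.publishedTime)
    : NaN;

  return {
    id: raw.url,
    url: raw.url,
    title,
    content,
    publishedAt: isNaN(timestamp) ? null : new Date(timestamp),
  };
}
```

Normalizing early means every later step (scoring, filtering, summarizing) can rely on one predictable record shape regardless of how a given site was scraped.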

2. Smart Processing Pipeline

A custom useNewsProcessor hook applies multiple AI-like layers:

Content extraction from HTML or markdown

Jurisdiction detection (federal vs. state)

Keyword extraction for compliance terms

Relevance scoring based on term frequency

This pipeline mimics human analysis—but it’s deterministic and code-driven.
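The layers above can be sketched in a few small pure functions. This is an illustration under stated assumptions, not the platform's actual `useNewsProcessor` code: the term lists, the `processArticle` name, and the "hits per 100 words" relevance formula are all placeholders chosen for the example.

```typescript
type Jurisdiction = "federal" | "state" | "unknown";

interface ProcessedArticle {
  jurisdiction: Jurisdiction;
  keywords: string[];
  relevance: number; // matched compliance terms per 100 words
}

// Illustrative vocabularies (assumptions); the real lists are not shown in the post.
const FEDERAL_MARKERS = ["fda", "cms", "dea", "federal register"];
const STATE_MARKERS = ["board of pharmacy", "state legislature", "state law"];
const COMPLIANCE_TERMS = ["recall", "labeling", "controlled substance", "inspection"];

// Count case-insensitive, whole-phrase occurrences of a term in the text.
function countOccurrences(text: string, term: string): number {
  const escaped = term.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  const matches = text.match(new RegExp("\\b" + escaped + "\\b", "gi"));
  return matches ? matches.length : 0;
}

function countAll(text: string, terms: string[]): number {
  return terms.reduce((total, term) => total + countOccurrences(text, term), 0);
}

// Whichever marker list has more hits wins; ties go to federal.
function detectJurisdiction(text: string): Jurisdiction {
  const federal = countAll(text, FEDERAL_MARKERS);
  const state = countAll(text, STATE_MARKERS);
  if (federal === 0 && state === 0) return "unknown";
  return federal >= state ? "federal" : "state";
}

function processArticle(text: string): ProcessedArticle {
  const wordCount = text.split(/\s+/).filter(Boolean).length;
  const hits = COMPLIANCE_TERMS
    .map((term) => ({ term, count: countOccurrences(text, term) }))
    .filter((hit) => hit.count > 0);
  const totalHits = hits.reduce((total, hit) => total + hit.count, 0);

  return {
    jurisdiction: detectJurisdiction(text),
    keywords: hits.map((hit) => hit.term),
    relevance: wordCount === 0 ? 0 : (totalHits / wordCount) * 100,
  };
}
```

Because every step is a pure function of the input text, the same article always produces the same jurisdiction, keywords, and score, which is what makes the pipeline easy to test and audit.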

3. Advanced Filtering and Sorting

Users can filter and sort by:

Jurisdiction (e.g. FDA, CMS, or specific states)

Date ranges (last 30, 60, 90 days)

Keywords in title or content

Source agency or department

It’s fast, intuitive, and accurate—and every piece is based on structured logic, not stochastic modeling.
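As a rough sketch of how these filters can compose, the function below applies each active criterion in turn and then sorts newest first. The `NewsItem` and `NewsFilter` shapes and the `filterAndSort` name are hypothetical; the post does not show the real `NewsFilters` component.

```typescript
interface NewsItem {
  title: string;
  content: string;
  jurisdiction: string; // e.g. "FDA", "CMS", or a state name
  source: string;       // source agency or department
  publishedAt: Date;
}

// Every field is optional: an unset field means "do not filter on this".
interface NewsFilter {
  jurisdiction?: string;
  source?: string;
  keyword?: string;
  withinDays?: 30 | 60 | 90;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

function filterAndSort(
  items: NewsItem[],
  filter: NewsFilter,
  now: Date = new Date(), // injectable so tests can pin the current date
): NewsItem[] {
  const keyword = filter.keyword?.trim().toLowerCase();
  const cutoff =
    filter.withinDays === undefined
      ? null
      : now.getTime() - filter.withinDays * MS_PER_DAY;

  return items
    .filter((item) => {
      if (filter.jurisdiction && item.jurisdiction !== filter.jurisdiction) return false;
      if (filter.source && item.source !== filter.source) return false;
      if (cutoff !== null && item.publishedAt.getTime() < cutoff) return false;
      if (keyword) {
        // Case-insensitive substring match over title and content.
        const haystack = (item.title + " " + item.content).toLowerCase();
        if (haystack.indexOf(keyword) === -1) return false;
      }
      return true;
    })
    // filter() returned a new array, so sorting here does not mutate the input.
    .sort((a, b) => b.publishedAt.getTime() - a.publishedAt.getTime());
}
```

Passing `now` as a parameter is a small design choice that keeps the date-range filter deterministic under test.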

AI Without LLMs: Simulated Summaries

One of the app’s most useful modules is the AI Analyzer. This feature creates executive summaries, risk profiles, and compliance recommendations based on selected articles.

Currently, this system is LLM-free and built from:

Rule-based templates

Conditional logic

Prewritten summary structures

Example:

```ts
// Rule: flag articles that mention Schedule II controlled substances.
if (article.content.includes("Schedule II")) {
  summary = "This regulation may affect controlled substances under Schedule II classification.";
}
```

This feels intelligent, and it is. But it's logic-based, not generative. LLMs can eventually enhance this by rephrasing, adding nuance, or tailoring the tone, but the intelligence already exists through smart design.
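The single check in the example generalizes naturally to a table of rules. The sketch below is one possible way to structure that, not the platform's actual analyzer: `SummaryRule`, `RULES`, and `summarize` are hypothetical names, and only the Schedule II rule comes from this post (the recall rule is a made-up placeholder).

```typescript
interface SummaryRule {
  id: string;      // stable identifier, handy for audit logs
  pattern: RegExp; // what to look for in the article text
  summary: string; // prewritten sentence emitted on a match
}

// Illustrative rule table. Only "schedule-ii" is taken from the post;
// "recall" is an invented example.
const RULES: SummaryRule[] = [
  {
    id: "schedule-ii",
    // Word boundaries keep "Schedule III" from matching this rule.
    pattern: /\bSchedule II\b/,
    summary: "This regulation may affect controlled substances under Schedule II classification.",
  },
  {
    id: "recall",
    pattern: /\brecall\b/i,
    summary: "This item mentions a product recall and may require follow-up.",
  },
];

interface RuleMatch {
  ruleId: string;
  summary: string;
}

// Return every rule that fires, in table order, along with its id.
function summarize(content: string): RuleMatch[] {
  return RULES
    .filter((rule) => rule.pattern.test(content))
    .map((rule) => ({ ruleId: rule.id, summary: rule.summary }));
}
```

Two details are worth noting. A plain substring check for "Schedule II" also matches "Schedule III", which the word-boundary pattern avoids. And returning the `ruleId` alongside each sentence lets a reviewer trace any line of a summary back to the exact rule that produced it.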

Why I Chose Logic-Based AI First

Cost Efficiency

Hosted LLM APIs typically bill by usage (input and output tokens). At scale, say hundreds of documents a week, this adds up fast. Our logic-first implementation avoids those per-call costs.

Determinism and Auditability

Rule-based systems offer transparency, ideal for compliance-heavy industries.

Speed and Control

Logic-based filtering and analysis are fast and consistent, and they don’t require external API calls, making the user experience smoother and more reliable.

Smart Design = Real AI

A huge part of AI-driven development is designing workflows that feel intelligent, even without an underlying model. The Regulatory Compliance Intelligence Platform was built with smart logic in mind at every layer.

Here’s the component architecture:

Component Structure (Mermaid Diagram)

```mermaid
graph TD
    A[Index Page] --> B[Header]
    A --> C[CrawlForm]
    A --> D[NewsAggregator]
    C --> E[FirecrawlService]
    D --> F[NewsFilters]
    D --> G[NewsCard]
    D --> H[AIAnalyzer]
    D --> I[useNewsProcessor Hook]
    F --> J[Jurisdiction Filter]
    F --> K[Search Filter]
    F --> L[Date Range Filter]
    F --> M[Sort Options]
    H --> N[Article Selection]
    H --> O[Analysis Generation]
    H --> P[Export Functionality]
```

Each component is modular, reusable, and logic-aware, meaning intelligent behavior is embedded throughout rather than bolted on.

Technical Stack Highlights

Frontend: React 18 + TypeScript + Vite

Styling: Tailwind CSS + Shadcn/UI

Form Validation: React Hook Form + Zod

API Services: Firecrawl for structured web scraping

State Management: React Query and native state

Feedback/UX: Sonner for toast notifications

Data Flow Architecture

```text
1. Crawling Phase: User selects sites → FirecrawlService scrapes data → Articles stored in local state
2. Processing Phase: useNewsProcessor analyzes articles → Detects keywords/jurisdictions
3. Display Phase: NewsAggregator renders NewsCards → Filters applied live
4. Analysis Phase: Selected articles passed to AIAnalyzer → Risk summaries generated → Exportable
```

How This Approach Applies Elsewhere

Logic-first AI is everywhere:

Monday.com uses automation recipes (no LLM) to route tasks

Trello has Butler rules that simulate intelligent decision-making

Zendesk routes tickets based on keywords and historical resolution patterns

TurboTax builds AI flows around tax decisions without generative language

These systems are intelligent—just not generative. That’s the distinction that matters.

LLMs as Enhancers, Not Foundations

While our app runs entirely without an LLM, the architecture supports easy integration later. Some ideas include:

Converting summaries into different tones (e.g., executive vs. technical)

Explaining regulatory language in plain English

Offering chat-style Q&A over filtered data

But by focusing first on smart structure, we’ve future-proofed the platform—LLMs become optional upgrades, not structural dependencies.

“LLMs are best used to augment structured systems—not replace them.” (Jurafsky & Martin, Speech and Language Processing, 2023)
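One way to keep an LLM an optional upgrade rather than a structural dependency is to hide it behind a small function type with a do-nothing default. This is a sketch of the idea only: `SummaryEnhancer`, `noEnhancer`, and `buildSummary` are hypothetical names, and no real LLM client is called here.

```typescript
// An enhancer takes a finished, rule-generated summary and returns a reworded one.
// A future LLM integration would be one implementation of this type (assumption).
type SummaryEnhancer = (summary: string) => Promise<string>;

// Default: no LLM involved, the deterministic summary passes through unchanged.
const noEnhancer: SummaryEnhancer = async (summary) => summary;

async function buildSummary(
  baseSummary: string,
  enhance: SummaryEnhancer = noEnhancer,
): Promise<string> {
  try {
    return await enhance(baseSummary);
  } catch {
    // If the optional enhancer fails, fall back to the deterministic text.
    return baseSummary;
  }
}
```

With this shape the app works fully with the default, and a failing or unavailable enhancer degrades to the rule-based output instead of breaking the analysis step.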

Final Thoughts: Build Smart Before You Build Big

The hype around generative AI is warranted—but not everything intelligent needs to be predictive or generative.

At its core, the Regulatory Compliance Intelligence Platform succeeds because of smart, logic-first design. It mimics how a regulatory analyst thinks. It’s fast, explainable, and efficient. And it’s ready to scale.

So next time someone says, “Let’s make it AI-powered,” ask:

“Are we solving a structured problem? And if so—do we really need an LLM?”

References

Russell, S., & Norvig, P. (2021). Artificial Intelligence: A Modern Approach. Pearson.

Jurafsky, D., & Martin, J. H. (2023). Speech and Language Processing (3rd ed.). Stanford University.

Lorica, B. (2024). The Gradient – Designing for Intelligence.

O’Reilly Media. (2022). Practical AI Architectures.